MonoNav: MAV Navigation via Monocular Depth Estimation and Reconstruction

Nathaniel Simon, Anirudha Majumdar

Best Paper: Learning Robot Super Autonomy Workshop at IROS 2023.

In Proceedings: ISER 2023.

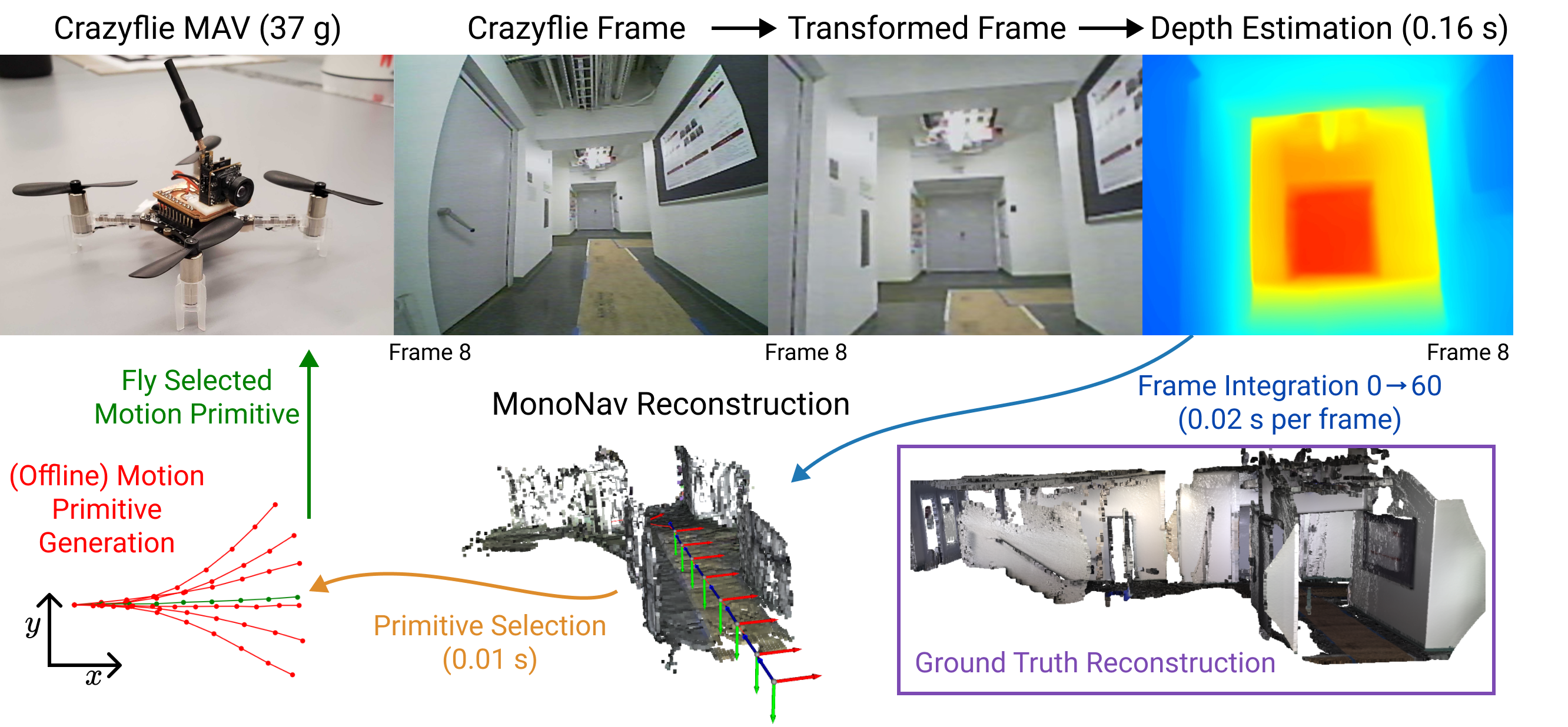

Abstract: A major challenge in deploying the smallest of Micro Aerial Vehicle (MAV) platforms (< 100 g) is their inability to carry sensors that provide high-resolution metric depth information (e.g., LiDAR or stereo cameras). Current systems rely on end-to-end learning or heuristic approaches that directly map images to control inputs, and struggle to fly fast in unknown environments. In this work, we ask the following question: using only a monocular camera, optical odometry, and offboard computation, can we create metrically accurate maps to leverage the powerful path planning and navigation approaches employed by larger state-of-the-art robotic systems to achieve robust autonomy in unknown environments? We present MonoNav: a fast 3D reconstruction and navigation stack for MAVs that leverages recent advances in depth prediction neural networks to enable metrically accurate 3D scene reconstruction from a stream of monocular images and poses. MonoNav uses off-the-shelf pre-trained monocular depth estimation and fusion techniques to construct a map, then searches over motion primitives to plan a collision-free trajectory to the goal. In extensive hardware experiments, we demonstrate how MonoNav enables the Crazyflie (a 37 g MAV) to navigate fast (0.5 m/s) in cluttered indoor environments. We evaluate MonoNav against a state-of-the-art end-to-end approach, and find that the collision rate in navigation is significantly reduced (by a factor of 4). This increased safety comes at the cost of conservatism in terms of a 22% reduction in goal completion.

Try MonoNav!

The MonoNav GitHub repository contains all of the code for this project, including a demo dataset (monocular images and poses) for running the depth estimation, reconstruction, and planning pipelines out of the box (no robot needed).

MonoNav System Overview

MonoNav uses pre-trained depth-estimation networks (ZoeDepth) to convert RGB images into depth estimates, then fuses them into a 3D reconstruction using Open3D. MonoNav then selects from motion primitives to navigate collision-free to a goal position. If the motion primitive selection problem is infeasible (e.g., no motion primitive remains sufficiently far from obstacles), then MonoNav executes a safety maneuver (e.g., stop and land).
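Open3D's fusion handles this internally, but the geometry that links a predicted depth map and a camera pose to points in a shared world frame is worth seeing explicitly, since it is why the depth must be metric: with metric odometry poses, depth at the wrong scale would place points from successive frames in inconsistent positions. The sketch below is not MonoNav's code. The function name `backproject`, the OpenCV-style axis convention (x right, y down, z forward), the camera-to-world pose form, and the `max_depth` cutoff are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def backproject(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    rotation: Sequence[Sequence[float]],
    translation: Sequence[float],
    max_depth: float = 5.0,
) -> List[Point3]:
    """Lift a metric depth map (meters, indexed depth[v][u]) to world-frame points.

    Hypothetical helper: assumes a pinhole camera with OpenCV axes and a
    camera-to-world pose, p_world = R @ p_cam + t.
    """
    points: List[Point3] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            # Skip invalid pixels and those beyond the (hypothetical) truncation depth.
            if z <= 0.0 or z > max_depth:
                continue
            # Pinhole back-projection: pixel (u, v) at depth z -> camera frame.
            cam = ((u - cx) * z / fx, (v - cy) * z / fy, z)
            # Rigid transform from the camera frame into the world frame.
            world = [
                sum(rotation[i][j] * cam[j] for j in range(3)) + translation[i]
                for i in range(3)
            ]
            points.append((world[0], world[1], world[2]))
    return points
```

A volumetric method such as TSDF fusion then accumulates many such frames into one consistent map; the sketch covers only the per-frame projection step.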

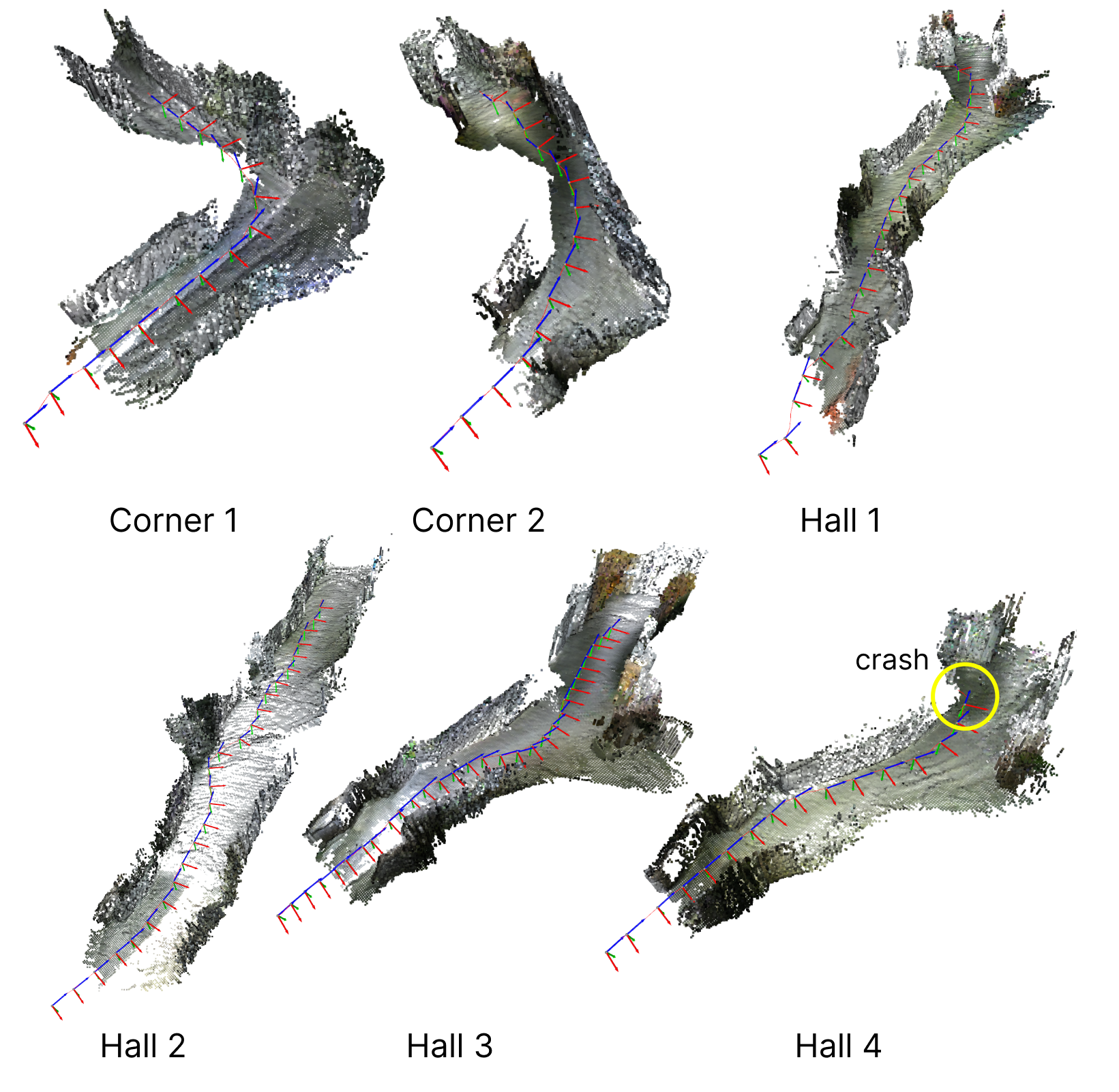

Examples: Reconstruction and Planning

MonoNav navigates diverse, constrained indoor environments, including hallways with corners, curved walls, and furniture. The crash in Hall 4 (bottom right) occurred because MonoNav turned into a previously occluded obstacle. This limitation arises because MonoNav is currently configured to explore aggressively, treating unseen space as free.
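To make the planning step and the unseen-space trade-off concrete, here is a minimal sketch of motion-primitive selection over an occupancy grid. It is not MonoNav's implementation: the planar grid, the three cell states (occupied, free, unknown), the square clearance neighborhood, the closest-endpoint-to-goal cost, and every name (`select_primitive`, `is_clear`, `unknown_is_free`) are hypothetical. Returning `None` stands in for the infeasible case that triggers the safety maneuver. The `unknown_is_free` flag mirrors the aggressive configuration described above: when unobserved cells count as free, the planner may commit to a turn into space it has never seen.

```python
import math
from typing import Dict, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in meters; a planar map for brevity
Cell = Tuple[int, int]

OCCUPIED, FREE = "occupied", "free"  # cells absent from the grid are unknown


def to_cell(p: Point, cell_size: float) -> Cell:
    """Index of the grid cell that contains point p."""
    return (math.floor(p[0] / cell_size), math.floor(p[1] / cell_size))


def is_clear(p: Point, grid: Dict[Cell, str], cell_size: float,
             clearance: float, unknown_is_free: bool) -> bool:
    """True if no cell in the square neighborhood around p blocks it.

    A cell blocks p if it is occupied, or if it was never observed and
    unknown_is_free is False.
    """
    r = math.ceil(clearance / cell_size)
    ci, cj = to_cell(p, cell_size)
    for di in range(-r, r + 1):
        for dj in range(-r, r + 1):
            state = grid.get((ci + di, cj + dj))
            if state == OCCUPIED or (state is None and not unknown_is_free):
                return False
    return True


def select_primitive(primitives: Sequence[Sequence[Point]], goal: Point,
                     grid: Dict[Cell, str], cell_size: float = 0.1,
                     clearance: float = 0.2,
                     unknown_is_free: bool = True) -> Optional[int]:
    """Index of the feasible primitive whose endpoint is closest to the goal.

    Returns None if every primitive is blocked; the caller should then run
    a safety maneuver (e.g., stop and land).
    """
    best: Optional[int] = None
    best_dist = math.inf
    for idx, waypoints in enumerate(primitives):
        feasible = all(
            is_clear(w, grid, cell_size, clearance, unknown_is_free)
            for w in waypoints
        )
        if feasible:
            d = math.dist(waypoints[-1], goal)
            if d < best_dist:
                best, best_dist = idx, d
    return best


if __name__ == "__main__":
    # Observed free cells for x in [-1, 4], y in [-3, 1]; y >= 2 never seen.
    grid = {(i, j): FREE for i in range(-1, 5) for j in range(-3, 2)}
    grid[(4, 0)] = OCCUPIED  # obstacle straight ahead
    primitives = [
        [(1.5, 0.5), (2.5, 0.5), (3.5, 0.5)],  # 0: straight
        [(1.5, 1.5), (2.5, 1.5)],              # 1: left, toward unseen space
        [(1.5, -0.5), (1.5, -1.5)],            # 2: right, through seen space
    ]
    goal = (5.5, 0.5)
    print(select_primitive(primitives, goal, grid, 1.0, 1.0, True))   # 1
    print(select_primitive(primitives, goal, grid, 1.0, 1.0, False))  # 2
    print(select_primitive(primitives, goal, {}, 1.0, 1.0, False))    # None
```

In the toy scene, the straight primitive is rejected because of the obstacle. With `unknown_is_free=True` the planner prefers the left turn into unobserved space because it ends nearer the goal; with `unknown_is_free=False` it falls back to the right turn through observed free space. Treating unknown space as blocked is safer but more conservative, and with nothing observed it rejects every primitive.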

Results: Comparison to State of the Art

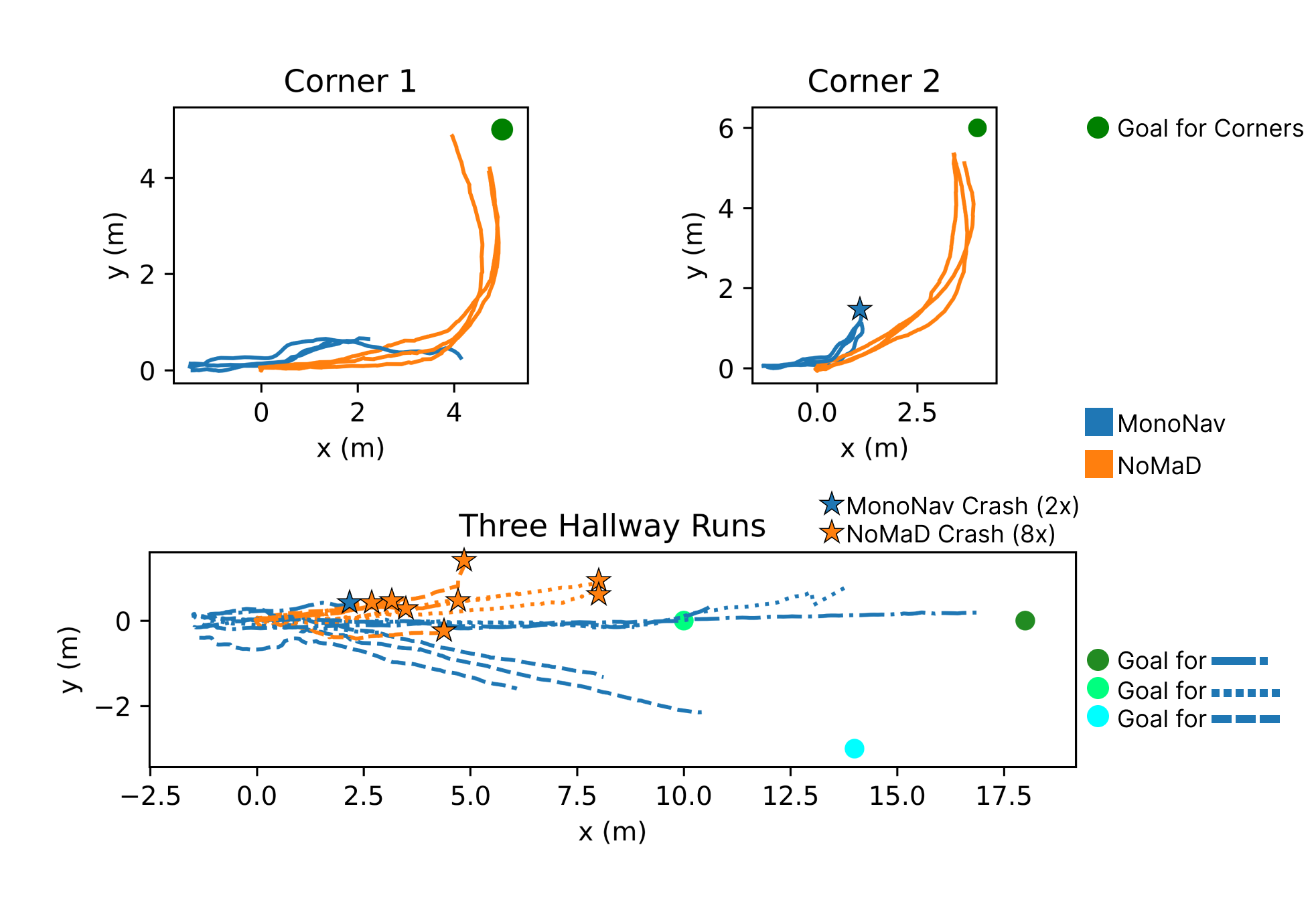

We evaluate MonoNav against NoMaD: Goal Masking Diffusion Policies for Navigation and Exploration, a state-of-the-art approach in monocular navigation. NoMaD uses a transformer encoder and a diffusion policy, trained on over 100 hours of robot navigation data, to directly output action candidates, with optional goal-image conditioning to further refine them.

We find that NoMaD performs well when a clear maneuver is required (e.g., turning left to avoid a wall) but struggles in settings that call for more nuanced action candidates. For example, in a straight hallway segment, NoMaD produces straight action candidates, which are neither expressive nor evasive enough to avoid a collision as the quadrotor drifts into the wall.

Across 15 trials in 5 unique environments, we plot the goal positions, trajectories, and crash locations. MonoNav's key advantage is its ability to reason explicitly about metric scale, which allows it to stop itself (self-arrest) when it detects an imminent collision. This capability yields a 4x reduction in collisions. The increased safety comes at the cost of conservatism: MonoNav shows a 22% reduction in goal completion.

BibTeX

@inproceedings{simon2023mononav,
  title = {MonoNav: MAV Navigation via Monocular Depth Estimation and Reconstruction},
  author = {Nathaniel Simon and Anirudha Majumdar},
  booktitle = {Symposium on Experimental Robotics (ISER)},
  year = {2023},
  url = {https://arxiv.org/abs/2311.14100}
}